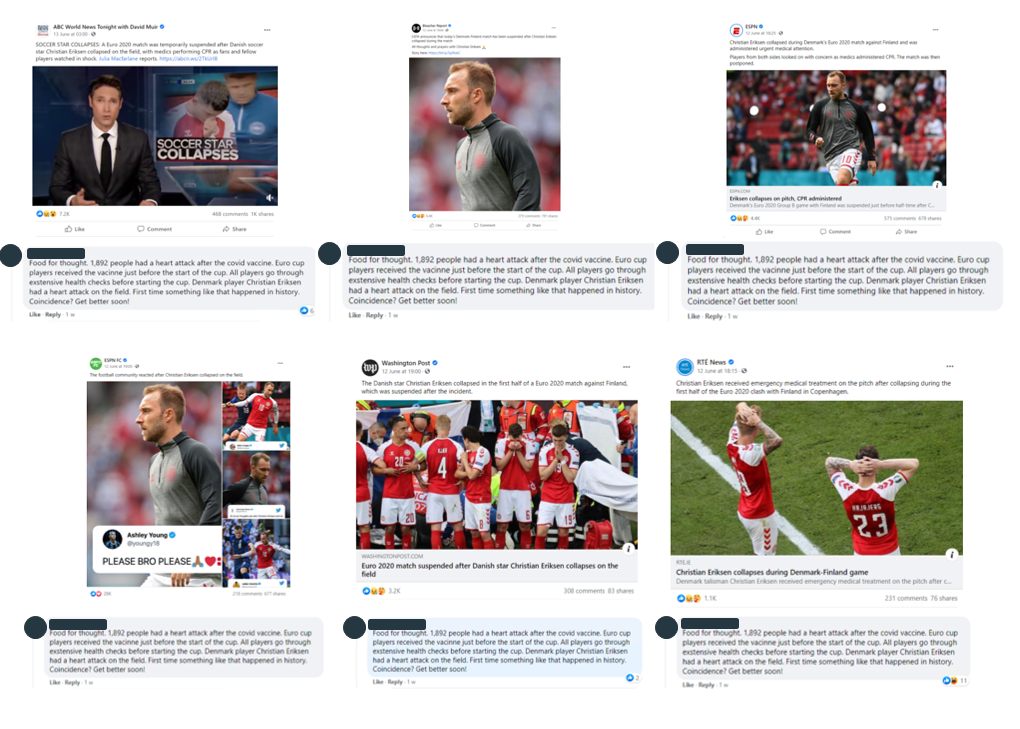

In the aftermath of Christian Eriksen’s collapse and subsequent cardiac arrest, PGI uncovered coordinated efforts on social media to amplify false claims that the incident was caused by a COVID-19 vaccine. English, Dutch, German and Danish Twitter trolls have been repeatedly sharing misleading content published by British and American conspiracy theory websites, while users on Facebook have spammed football pages with copy-and-pasted statements falsely claiming that Eriksen collapsed because he had received the Pfizer jab a matter of days before Denmark’s match against Finland. In this piece, I explore the infrastructure that has enabled this disinformation to spread.

Media outlets and football pages across social media swiftly expressed their concern for Christian Eriksen after his collapse during Denmark’s football match against Finland on 12 June. Unfortunately, the event also sparked a different kind of reaction. A mere 11 minutes after Eriksen’s collapse, a Facebook user with one listed friend commented on one of these posts, published by the Bleacher Report Football page on Facebook: “Vaccine is not good!!!?”.

This user was not alone in suggesting that Eriksen’s collapse was caused by a COVID-19 vaccine. PGI observed that other social media users on Facebook, Instagram, YouTube and Twitter were quick to claim, without evidence, that Eriksen had received a vaccine days before Denmark’s match against Finland.

Early fact-checking attributed the disinformation to a fringe physicist and blogger called Luboš Motl, who falsely claimed in a tweet that Eriksen had specifically received the Pfizer vaccine. Motl’s tweet, which had subsequently been reshared by other anti-vaccination figures, including Alex Berenson, was removed after Inter Milan director Giuseppe Marotta confirmed that Eriksen had not received any COVID-19 vaccine prior to the tournament.

Unfortunately, this did not prevent the continuing onslaught of vaccine-related disinformation.

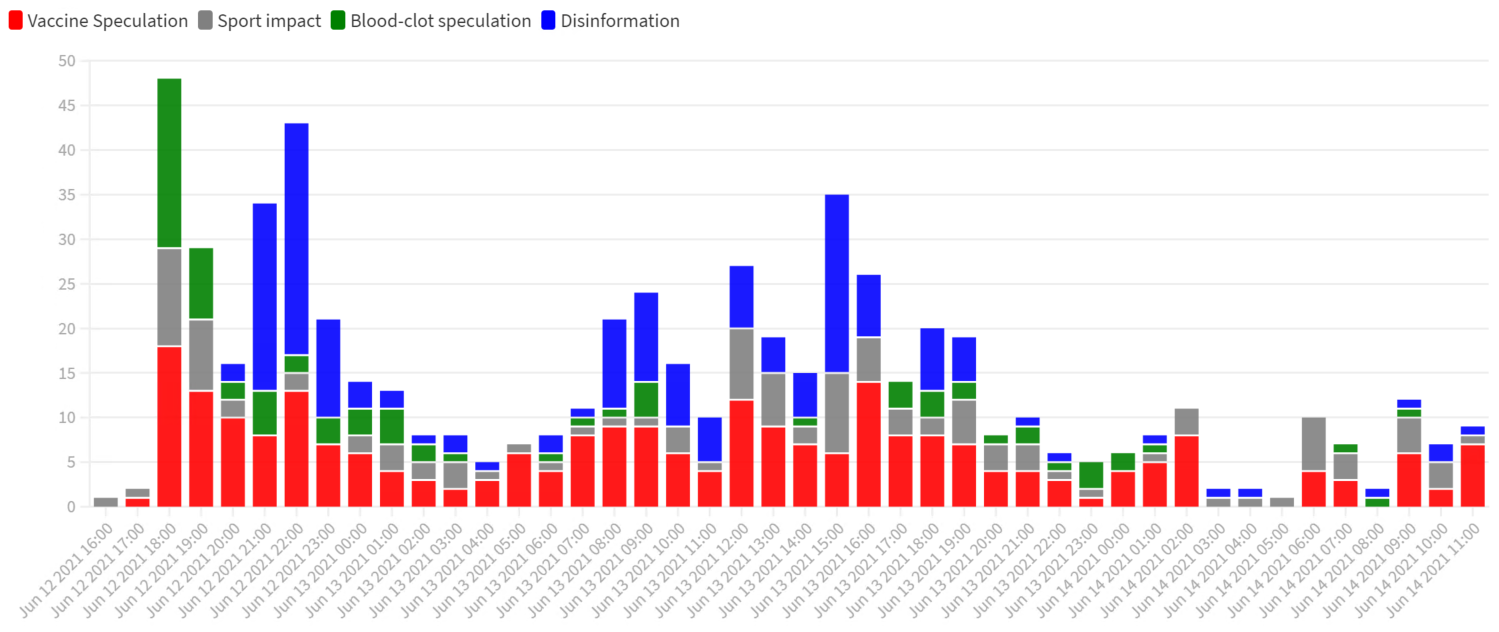

Very shortly after Eriksen’s collapse, there was a lot of speculation about the cause, questioning whether it could have been due to a blood clot, or more specifically a blood clot caused by a COVID-19 vaccine. Discourse during this period was less direct, consisting predominantly of questions rather than outright assertions. There were also questions about what this event would mean for the match, the tournament, and the sport.

This questioning and speculation created fertile ground for more proactive individuals and malign media entities to spread false information. In fact, the speed at which the information environment became dominated by vaccine scepticism shows both how widespread vaccine misinformation is among social media users and how quickly that scepticism can be exploited by opportunistic threat actors engaged in coordinated inauthentic behaviour.

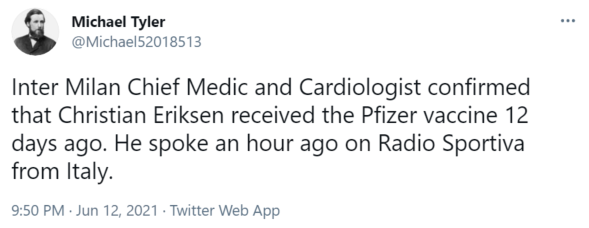

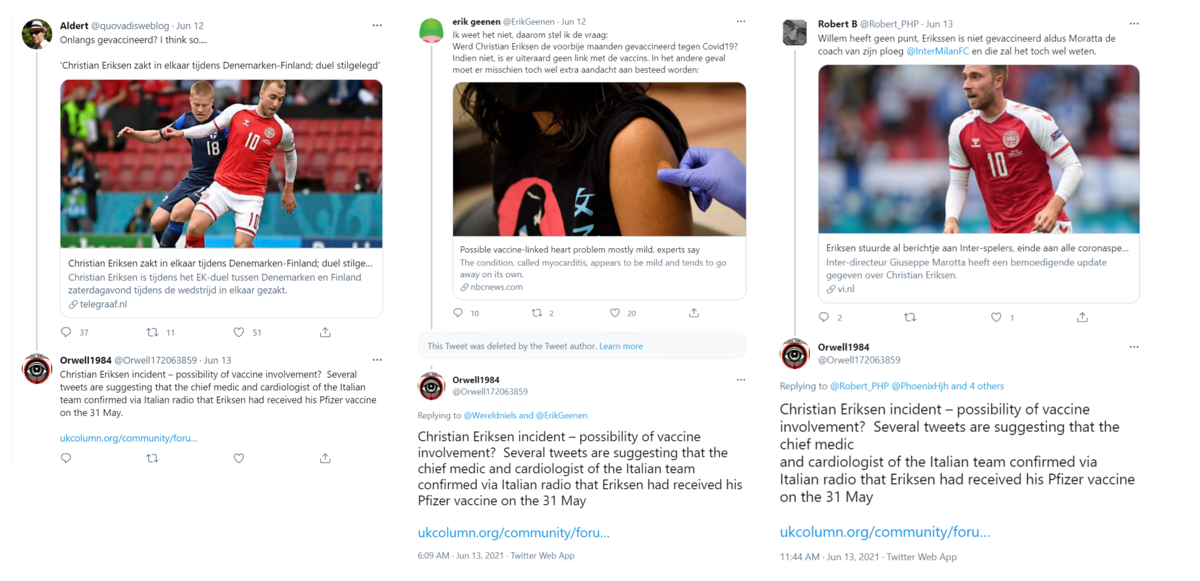

On the evening of Eriksen’s collapse, suspicious users started to share statements that appeared to confirm the earlier rumours, alleging that Inter Milan’s chief medic had confirmed in an interview with the Italian station Radio Sportiva that Eriksen had received the Pfizer vaccine. Radio Sportiva quickly denied that this interview had ever taken place, but other anonymous trolls continued to share the claim the following day.

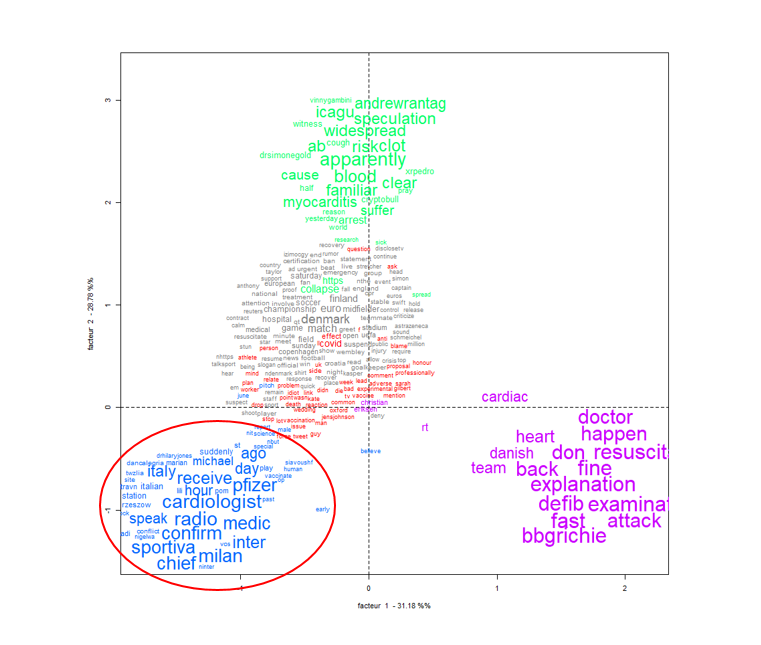

The claim quickly dominated early discussions about the collapse, forming a significant cluster in references to the keywords “Christian Eriksen” and “Vaccine” on social media.

The prominence of the rumour on social media, specifically the reference to Radio Sportiva, was quickly attributed to a group of accounts repetitively sharing the claim either as stand-alone tweets or in response to other users.

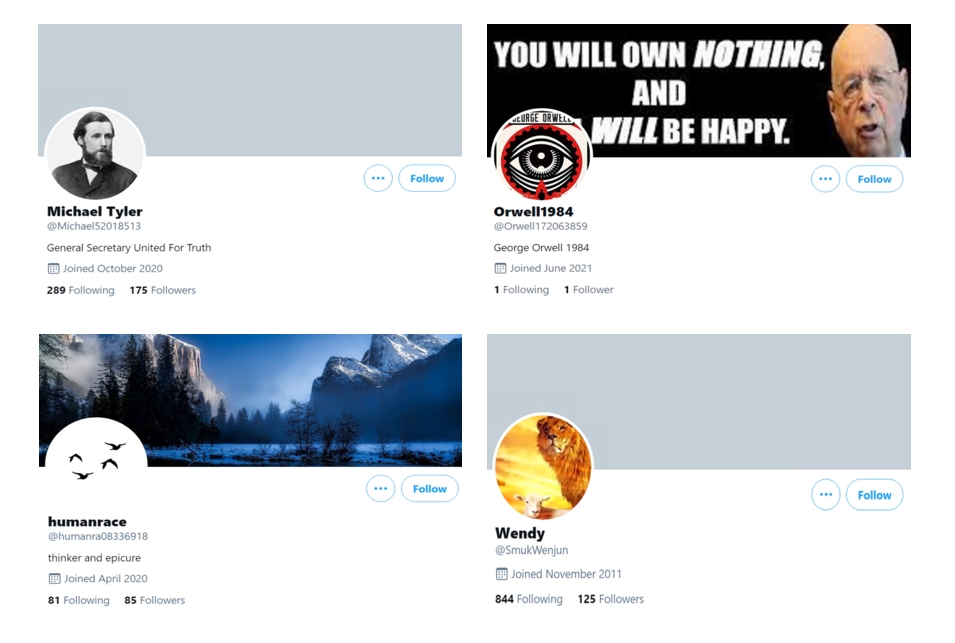

This activity was dominated by three accounts, all of which exhibited the common attributes of inauthentic bot/troll accounts as detailed in our previous posts exploring the racist trolling of Sainsbury’s, and the trolling of Patsy Stevenson following the Sarah Everard vigil. The most notable similarities are:

Bots, trolls, and other pseudonymous inauthentic users tend to default to long autogenerated handles when making their accounts, which they often do swiftly and in bulk.

The suspect profiles all used profile pictures that concealed their identity.

Repetitive resharing of anti-vaxx content
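
The first of these attributes can be screened for mechanically. The following is a minimal sketch only, assuming that an autogenerated handle looks like a name-like prefix followed by a long run of digits. The pattern, the five-digit threshold and the sample handles are illustrative assumptions, not the method PGI used, and a match is a prompt for manual review rather than proof of inauthenticity.

```python
import re

# Assumption: an "autogenerated" handle is a name-like prefix followed by a
# long run of digits. The five-digit threshold is illustrative only.
AUTOGENERATED_HANDLE = re.compile(r"^[A-Za-z_]+\d{5,}$")


def looks_autogenerated(handle: str) -> bool:
    """Return True if the handle matches the assumed autogenerated pattern."""
    return bool(AUTOGENERATED_HANDLE.match(handle.lstrip("@")))


# Hypothetical handles, invented for illustration.
handles = ["@JohnSmi48201937", "@football_fan", "@news2021"]
flagged = [h for h in handles if looks_autogenerated(h)]
print(flagged)
```

A heuristic like this would normally be combined with the other two attributes, since plenty of genuine users also keep a default handle.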

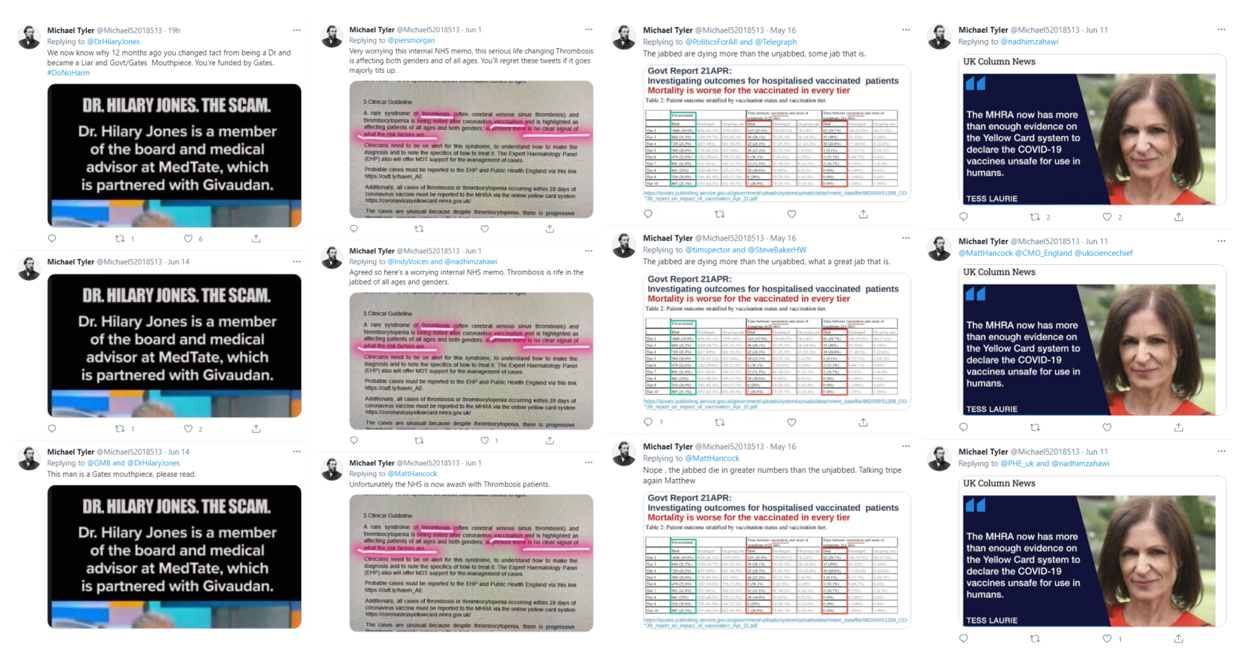

The most active account sharing disinformation about Eriksen’s collapse was Michael Tyler. The account, active since October 2020, almost exclusively shares anti-lockdown and anti-vaccination disinformation and appears to have been created with the express purpose of harassing high-profile media figures and politicians with vaccine disinformation.

This behaviour is consistent throughout Michael Tyler’s Twitter feed. The account often targets the same influential users with copied statements and media. These tactics possibly indicate that the account is one of many amplifier troll accounts with access to a directory of media and a list of designated targets.

The account was also previously implicated in a disinformation campaign on Twitter that falsely claimed French Nobel Prize-winning virologist Luc Montagnier said that people receiving any COVID-19 vaccine would die within two years.

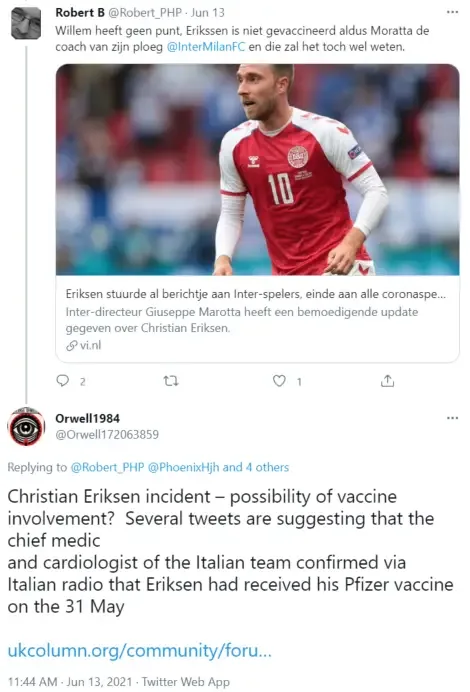

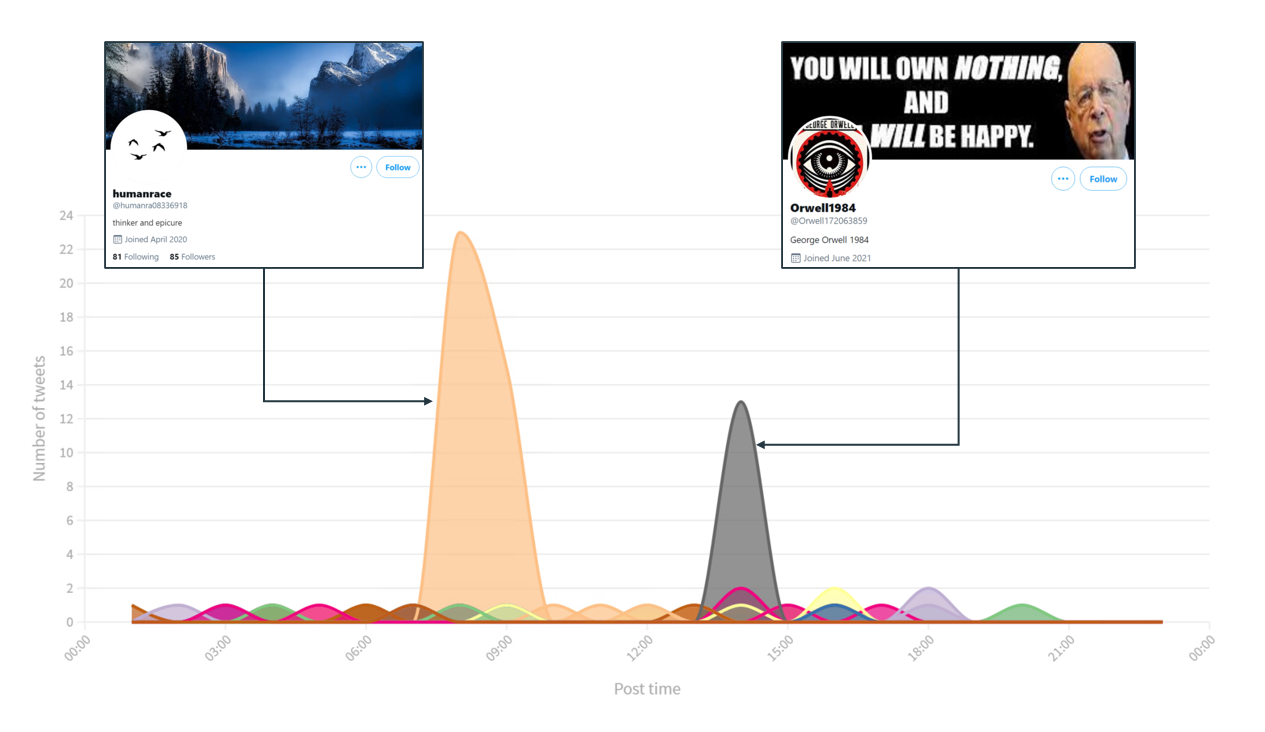

The second most influential amplifier, Orwell1984, was created on the evening of Eriksen’s collapse on 12 June and has been used exclusively to harass various high-profile Western European politicians and scientists with disinformation related to his collapse.

Most of Orwell1984’s activity consisted of sharing the same drafted tweet linking users to a fake-news article published by the American far-right conservative media outlet citizenfreepress.com. Most of these tweets were posted within one minute of each other.
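
Posting cadence of this kind is straightforward to check once an account’s post timestamps are available. The sketch below is one possible approach under stated assumptions: the function name `find_bursts`, the one-minute gap, the minimum burst size of three and the sample timestamps are all hypothetical and are not drawn from PGI’s tooling or data.

```python
from datetime import datetime, timedelta


def find_bursts(timestamps, max_gap=timedelta(minutes=1), min_size=3):
    """Group timestamps into runs where consecutive posts are at most
    max_gap apart, and return the runs with at least min_size posts."""
    bursts, current = [], []
    for ts in sorted(timestamps):
        # A gap larger than max_gap closes the current run.
        if current and ts - current[-1] > max_gap:
            if len(current) >= min_size:
                bursts.append(current)
            current = []
        current.append(ts)
    if len(current) >= min_size:
        bursts.append(current)
    return bursts


# Hypothetical posting times for a single account (not real data).
posts = [
    datetime(2021, 6, 12, 21, 0, 5),
    datetime(2021, 6, 12, 21, 0, 40),
    datetime(2021, 6, 12, 21, 1, 20),
    datetime(2021, 6, 12, 21, 2, 10),
    datetime(2021, 6, 13, 9, 30, 0),
]
bursts = find_bursts(posts)
print(len(bursts), [len(b) for b in bursts])
```

With these sample values, the first four posts form a single burst and the post on the following morning is excluded.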

Like Michael Tyler, Orwell1984 has also repetitively shared media from The UK Column, a British far-right alternative multimedia website known to publish false or misleading information and conspiracy theories regarding COVID-19, vaccines, climate-change and the New World Order.

Another user, called humanrace, has also been repetitively amplifying the Citizen Free Press article alongside Orwell1984, and the corroborating tweets posted by Michael Tyler.

Orwell1984 and humanrace make up the overwhelming majority of users resharing the fake-news article about Eriksen’s collapse on Twitter.

Outside of Twitter, PGI observed concerted efforts by authentic users to spam high-profile football pages with disinformation linking Eriksen’s collapse to a COVID-19 vaccine.

The users sharing these repeat statements had no listed or expressed interest in football. In some instances, users were commenting on news outlets from countries they did not reside in, demonstrating the potential for disinformation to reach beyond geographies and interest groups.

Equipped with this disinformation, dedicated users were emboldened to go beyond their immediate social networks and exploit the topicality of Eriksen’s collapse to spread the misleading information further. Authors of disinformation can rely on the enthusiasm of credulous audiences to inauthentically amplify their content, albeit through low-cost amateur tactics such as copying and pasting the same statement.

PGI’s findings highlight the variety of ways in which Eriksen’s collapse is being exploited on social media, and how the broader online disinformation ecosystem often consists of a range of actors acting on a spectrum of intent and authenticity.

The initial speculation about the cause of Eriksen’s collapse came from real users. However, the reach and popularity of anti-vaccination sentiment online has instilled a degree of scepticism towards official media narratives, which encouraged users to post pre-emptive forecasts about the cause of Eriksen’s collapse.

What is interesting is that organic users attributed Eriksen’s collapse to a vaccine before coordinated threat actors released their disinformation articles, demonstrating how fake-news content can be shaped from the bottom up rather than strictly from the top down. The consumers of disinformation therefore have considerably more power in the creation of disinformation and in the selection of target themes than a top-down model would suggest.

Another interesting finding was the repetitiveness and proactivity of seemingly genuine social media users. Just because an anti-vaxx user is authentic (i.e., a real person using their own authentic social media profile), doesn’t mean their behaviour isn’t the result of a more coordinated directive. PGI observed many authentic users sharing the same anti-vaxx statements multiple times across multiple posts within a very short period.

Encrypted messaging platforms, like WhatsApp and Telegram, often host messaging lists from which vaccine sceptics obtain their information, which can be shared with instructions on what users can do to spread the word and counter pro-vaccine narratives on social media. The easiest of these strategies is to provide a quote which users can copy and paste into the comment section of high-profile accounts. In this case, the targets were football pages and news outlets covering the news of Eriksen’s collapse.
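
Copy-and-paste amplification of this kind leaves a simple signature: the same statement appearing under several different user names. The sketch below shows one way that signature could be surfaced, assuming comments are available as (user, text) pairs. The function names, the normalisation rules and the sample comments are hypothetical and for illustration only.

```python
import re
from collections import defaultdict


def normalise(text: str) -> str:
    """Lowercase, strip punctuation and collapse whitespace so that
    trivially edited copies of a statement compare equal."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def find_copied_statements(comments, min_users=2):
    """Map each normalised statement to the set of users who posted it,
    keeping only statements posted by at least min_users distinct users."""
    users_by_text = defaultdict(set)
    for user, text in comments:
        users_by_text[normalise(text)].add(user)
    return {t: u for t, u in users_by_text.items() if len(u) >= min_users}


# Hypothetical comments, invented for illustration.
comments = [
    ("user_a", "This is the SAME statement!"),
    ("user_b", "this is the same   statement"),
    ("user_c", "Get well soon, Christian."),
]
copied = find_copied_statements(comments)
print(copied)
```

Exact matching after normalisation only catches near-verbatim copies. Paraphrased statements would need fuzzier comparison, which is outside the scope of this sketch.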

Similarly, more proactive users take to Twitter to share related fake-news stories by tagging influential users in the hope that their many followers will pick up the story and amplify it themselves. Regardless of the success of this strategy, its intent is indicative of a coordinated effort to inauthentically amplify harmful disinformation. Instead of using bots or trolls, some threat actors have the benefit of access to a large audience of real users who can share content with a lower likelihood of having their profiles or posts removed.

This case highlights the opportunism of anti-vaccination networks on social media, and the speed with which they will exploit high-profile news stories to sow doubt and promote vaccine-hesitancy.

Additionally, the findings showcase the plurality of the disinformation threat landscape on social media: real users and coordinated inauthentic troll networks often overlap and interact to push the same theories. Similarly, the dominance gained by fringe alternative media outlets like The UK Column and the Citizen Free Press demonstrates the danger posed by non-state threat actors and the tools they have at their disposal to amplify their content and access the mainstream. The result is a withering of trust in official vaccination mandates and the promotion of discord in the civic response to the pandemic, which threatens to affect the most vulnerable among us.

PGI’s Digital Investigations Team work with both public and private sector entities to help them understand how social media can affect their business. From high-level assessments of the risks of disinformation to electoral integrity in central Africa to deep dives into specific state-sponsored activity in eastern Europe, we have applied our in-house capability globally.

Contact us to talk about your requirements.
