Racism on social media is a pervasive issue. While organic statements of prejudice are commonly expressed on platforms like Twitter, some groups and individuals are leveraging the algorithmic architecture of social media to amplify their hostile beliefs, using it as an opportunity to target companies and derail their marketing and communication efforts.

Social media posts by influential figures and businesses give antagonistic users a wealth of low-cost opportunities to broadcast controversial statements that are likely to provoke backlash and inflame pre-existing political tensions. A recent and highly publicised example of a corporation falling victim to this kind of opportunism came at Christmas 2020, when one of the UK’s leading supermarkets, Sainsbury’s, was targeted.

On 14 November 2020, Sainsbury’s published the first of their Christmas adverts, titled ‘Gravy Song’, on their Twitter feed, which was followed by over 580,000 people at the time. The advert contained home-style footage of a British family enjoying Christmas together.

The advert was swiftly marred by a bitter social media backlash when some Twitter users expressed their disapproval, citing the ethnicity of the family in the footage as proof that Sainsbury’s was pandering to ‘PC culture’ or ‘racial exclusionism’. The advert garnered 3.2 million views on Twitter alone.

This response became the dominant feature of the advert’s wider media coverage, with news outlets attributing the backlash to a dissatisfied customer base and linking it to wider issues such as ethnic diversity. The advert, or rather its representation following the backlash, served to inflame existing political fault lines and amplified a narrow set of racist grievances to a disproportionately large audience.

Initial media reports gave the impression that the racist responses were both widespread and authentic. On investigation, however, these comments were a small minority: the vast majority of responses to the advert were positive. After the racist comments appeared, much of the Twitter thread was dominated by other users denouncing the accounts that made them.

On closer inspection, PGI found that most of the opposition to the advert, while no less offensive, came from a group of inauthentic hyper-partisan trolls who exhibited a broader pattern of extremist provocation on Twitter, exploiting an array of topical stories to broadcast hate speech and disinformation. These users were not Sainsbury’s authentic customer base. But by seeding racist remarks into the comment section of the Christmas advert, the trolls gained far more amplification than they could ever have hoped to achieve through their own networks, to the extent that their activity became the defining feature of Sainsbury’s Christmas narrative.

In this context, an inauthentic user is not an automated account such as a bot, but an anonymous user who is committed to extremist ideologies and to broadcasting them by any means possible, with a level of dedication that is uncharacteristic of the wider public and of authentic social media users.

This is not to say that the racist sentiment is any less genuine, but it does highlight a degree of opportunism and coordination that sets it apart from more isolated examples of hate speech on social media. Its concerted nature makes it no less problematic; rather, recognising that coordination helps shape how corporations and news outlets can respond to this kind of harassment without playing into the inauthentic signposting of racist statements online.

Over 60 articles covering the backlash were published in the week following the advert’s release, spreading the story across global news networks. Although the racist comments originated on Twitter, their amplification through mainstream media coverage carried the sentiments to even broader audiences on other platforms, including Facebook, Instagram, Reddit and LinkedIn. Stories of the advert’s backlash reached an estimated web audience of nearly 250 million people within 48 hours of its publication.

As each piece of coverage was shared to the outlet’s own social media pages, the story continued to amass hundreds of thousands of comments, including further racist criticism of the advert. Bringing these sentiments to public attention in this way derailed the company’s message.

To better understand this case, PGI analysed the Twitter users posting these racist comments and found that some exhibited characteristics of inauthentic hyper-partisan trolls.

The profiles exhibited several red flags associated with inauthentic troll accounts, including:

Many of the trolls identified by PGI reshared and commented on the same tweets despite not following each other, and they frequently amplified polarising statements from extreme right-wing influencers in the UK and US.
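Coordination of this kind can be surfaced by comparing which tweets each account engages with. The sketch below is a minimal illustration, not PGI’s methodology: it assumes you already hold, for each account, the set of tweet IDs it replied to or reshared and the set of accounts it follows, and it flags pairs whose engagements overlap strongly (measured by Jaccard similarity) without a follow relationship in either direction. The account names, data and thresholds (`min_shared`, `min_jaccard`) are hypothetical.

```python
from itertools import combinations

# Hypothetical example data: account -> IDs of tweets it replied to or reshared.
engagements = {
    "acct_a": {"t1", "t2", "t3", "t4"},
    "acct_b": {"t2", "t3", "t4", "t9"},
    "acct_c": {"t7", "t8"},
}
# Hypothetical follow graph: account -> accounts it follows.
follows = {"acct_a": set(), "acct_b": set(), "acct_c": {"acct_a"}}


def jaccard(a: set, b: set) -> float:
    """Overlap of two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def co_engagement_pairs(engagements, follows, min_shared=2, min_jaccard=0.3):
    """Flag account pairs that engage with many of the same tweets
    but do not follow each other in either direction."""
    flagged = []
    for u, v in combinations(sorted(engagements), 2):
        shared = engagements[u] & engagements[v]
        linked = v in follows.get(u, set()) or u in follows.get(v, set())
        score = jaccard(engagements[u], engagements[v])
        if not linked and len(shared) >= min_shared and score >= min_jaccard:
            flagged.append((u, v, len(shared), round(score, 2)))
    return flagged


print(co_engagement_pairs(engagements, follows))
# → [('acct_a', 'acct_b', 3, 0.6)]
```

Shared engagement on its own is weak evidence, since popular tweets attract many unrelated replies, so a signal like this would normally be weighed alongside other indicators rather than treated as proof of coordination.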

PGI collected all of these users’ tweets and used a variety of Natural Language Processing (NLP) methods to conduct a thematic analysis of their timeline content.

The suspect accounts posting racist remarks in response to the Sainsbury’s advert displayed a wider pattern of tweeting and resharing almost exclusively about wedge issues, such as Brexit and immigration, to attack and harass opponents.
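One simple way to quantify how heavily an account leans on particular wedge issues is keyword-based theme tagging. The sketch below is a deliberately basic stand-in for the NLP methods described above, not the analysis PGI ran: the `THEMES` lexicon is a tiny hypothetical example, and a real thematic analysis would typically rely on much richer lexicons or topic models.

```python
import re

# Hypothetical, deliberately tiny theme lexicons; real analysis needs far richer ones.
THEMES = {
    "brexit": {"brexit", "eu", "remainer", "remainers"},
    "immigration": {"immigration", "immigrant", "immigrants",
                    "migrant", "migrants", "border", "borders"},
}


def tokenize(text: str) -> set:
    """Lower-case the text and return its set of alphabetic tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def theme_profile(tweets, themes=THEMES):
    """For each theme, the fraction of tweets mentioning at least one of its
    keywords; "any" is the fraction mentioning at least one theme."""
    n = len(tweets)
    if n == 0:
        return {name: 0.0 for name in [*themes, "any"]}
    token_sets = [tokenize(t) for t in tweets]
    profile = {
        name: sum(1 for toks in token_sets if toks & keywords) / n
        for name, keywords in themes.items()
    }
    all_keywords = set().union(*themes.values())
    profile["any"] = sum(1 for toks in token_sets if toks & all_keywords) / n
    return profile


timeline = [
    "Brexit means Brexit, remainers out",
    "Stop the boats, secure the border",
    "Lovely weather today",
    "The EU is finished",
]
print(theme_profile(timeline))
# → {'brexit': 0.5, 'immigration': 0.25, 'any': 0.75}
```

An account whose "any" share sits close to 1.0 across its whole timeline fits the pattern described here; comparing the profile against a sample of ordinary users would give a sense of what counts as unusually concentrated.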

The analysis also revealed that the handles of political figures and news outlets often co-occurred with swear words, reflecting the accounts’ history of hostile, confrontational harassment on social media and a clear goal of disrupting positive messaging.
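Handle and profanity co-occurrence can be counted tweet by tweet. This minimal sketch assumes tweets are available as plain strings; it extracts @handles with a regular expression and counts, for each handle, the tweets that also contain a term from `ABUSIVE_TERMS`, which is a hypothetical placeholder for a real profanity or abuse lexicon. The sample tweets and handles are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical placeholder for a real profanity/abuse lexicon.
ABUSIVE_TERMS = {"idiot", "idiots", "scum", "traitor", "traitors"}

HANDLE_RE = re.compile(r"@(\w{1,15})")  # Twitter handles are at most 15 characters
WORD_RE = re.compile(r"[a-z]+")


def handle_abuse_cooccurrence(tweets, abusive_terms=ABUSIVE_TERMS):
    """Count, for each @handle, the tweets that mention it alongside an abusive term."""
    counts = Counter()
    for text in tweets:
        handles = {h.lower() for h in HANDLE_RE.findall(text)}
        # Strip handles first so a handle cannot itself match the lexicon.
        words = set(WORD_RE.findall(HANDLE_RE.sub(" ", text).lower()))
        if handles and words & abusive_terms:
            counts.update(handles)
    return counts


sample = [
    "@PoliticianX you traitor",
    "@NewsOutlet @PoliticianX absolute scum",
    "@NewsOutlet thanks for the coverage",
]
print(handle_abuse_cooccurrence(sample).most_common())
# → [('politicianx', 2), ('newsoutlet', 1)]
```

Counting at tweet level (each handle once per tweet) keeps a single long rant from dominating the totals; normalising by how often each handle is mentioned overall would be a natural next step.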

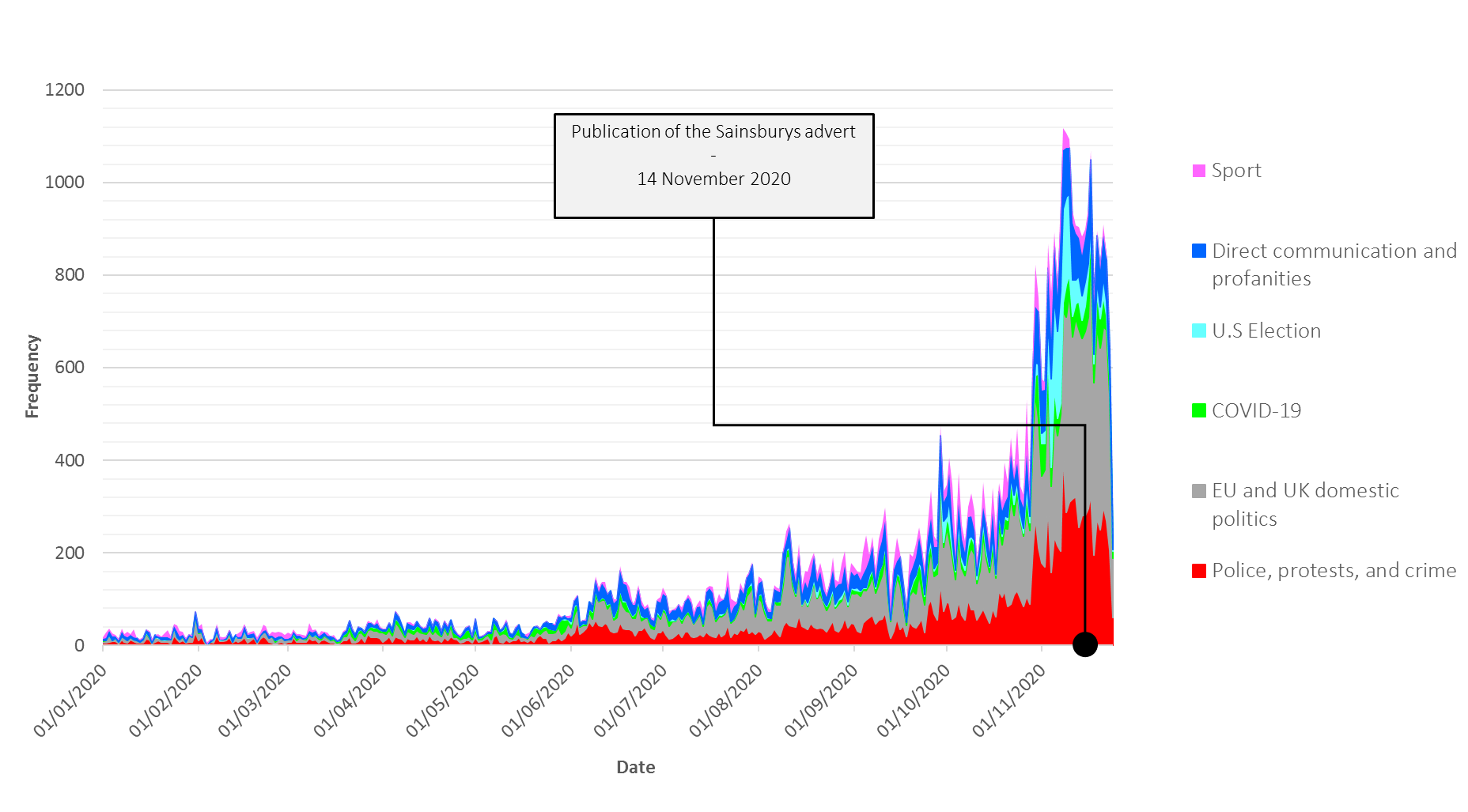

Plotting the thematic references in the users’ timelines over time shows a gradual increase in tweeting activity from June 2020, followed by a sharp rise after October. All profiles scaled up their hyper-partisan efforts before and immediately after commenting on the Sainsbury’s advert, suggesting that these comments were part of a much larger malign trolling campaign across Twitter, with Sainsbury’s as one of its victims.
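A time series of this kind can be produced by bucketing tweets by calendar month and counting theme-term references. This sketch assumes (timestamp, text) pairs with ISO-8601 timestamps and a hypothetical `POLITICAL_TERMS` lexicon; `sharp_rises` is an illustrative heuristic (a month at least `factor` times the previous month in the series), not a statistical test. The sample data is invented, and the resulting dictionary could be handed to any plotting library.

```python
import re
from collections import Counter
from datetime import datetime

# Hypothetical lexicon of political/wedge-issue terms.
POLITICAL_TERMS = {"brexit", "immigration", "migrants", "border"}


def monthly_theme_counts(tweets, terms=POLITICAL_TERMS):
    """Count theme-term references per calendar month.

    `tweets` is an iterable of (timestamp, text) pairs with ISO-8601
    timestamps such as "2020-06-03T10:00:00". Returns {"YYYY-MM": count}
    in chronological order; months with tweets but no references map to 0.
    """
    counts = Counter()
    for ts, text in tweets:
        month = datetime.fromisoformat(ts).strftime("%Y-%m")
        words = re.findall(r"[a-z]+", text.lower())
        counts[month] += sum(1 for w in words if w in terms)
    return dict(sorted(counts.items()))


def sharp_rises(series, factor=2.0):
    """Months whose count is at least `factor` times that of the previous
    month present in `series` (gaps are not filled in)."""
    months = list(series)
    return [cur for prev, cur in zip(months, months[1:])
            if series[prev] > 0 and series[cur] >= factor * series[prev]]


activity = [
    ("2020-06-03T10:00:00", "Brexit again"),
    ("2020-07-11T09:30:00", "Brexit and immigration"),
    ("2020-10-02T12:00:00", "migrants at the border, Brexit betrayal"),
    ("2020-11-15T18:45:00", "Brexit Brexit immigration border migrants migrants"),
]
series = monthly_theme_counts(activity)
print(series)               # → {'2020-06': 1, '2020-07': 2, '2020-10': 3, '2020-11': 6}
print(sharp_rises(series))  # → ['2020-07', '2020-11']
```

Running this per account, and aligning each series on the date the account commented on the advert, would show whether activity was already climbing beforehand, which is the comparison the paragraph above relies on.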

The continued growth of political references immediately after the users commented on the Sainsbury’s advert suggests that they were not deterred by the backlash. Instead, they used the media spotlight as an opportunity to keep spreading hyper-partisan content to the broader audience that the increased public attention afforded them.

It is essential to be able to distinguish sporadic, isolated prejudice from more concerted influence operations. Both are equally concerning, but they have different origins and therefore call for different responses.

In cases like the Sainsbury’s trolling, knowing whether a backlash is reactive or, as PGI found, part of a broader network can make the difference between conceding to social media harassment and resisting it while keeping control of the narrative. PGI has developed a methodology for identifying, mapping, and attributing malicious social media campaigns based on our extensive experience of threat intelligence and countering malign influence operations.

To identify whether racism on social media is isolated and organic or, by contrast, coordinated and inauthentic, a number of factors must be considered, including:

Evidence from each stage of analysis is then synthesised to create a profile of the accounts involved, their posting patterns, and areas of focus. This helps us to evaluate the degree of threat, coordination, and authenticity posed by an account or group of accounts. Where possible, this data is also used to attribute the likely owners of an account.

Equipped with this knowledge, corporations can tailor their responses to the threat they face, whether that is the genuine grievances of loyal customers or opportunistic harassment by provocative trolls, and take proportionate action as needed.

Understanding who you are dealing with allows you to maintain control of your brand’s narrative and stay ahead of the media response, encouraging media commentators to use appropriate language. If a hostile backlash is coordinated or inauthentic, like the one Sainsbury’s experienced, this kind of insight can inform responsible communication that does not inadvertently feed the trolls’ aspirations and ultimately keeps the company’s message on point.

PGI’s Digital Investigations Team works with both public and private sector entities to help them understand how social media can affect their business. From high-level assessments of the risks that disinformation poses to electoral integrity in central Africa, to deep dives into specific state-sponsored activity in eastern Europe, we have applied our in-house capability globally.